Optical computers light up the horizon

Since their invention, computers have become faster and faster, as a result of our ability to increase the number of transistors on a processor chip.

Today, your smartphone is millions of times faster than the computers NASA used to put the first man on the moon in 1969. It even outperforms the most famous supercomputers from the 1990s. However, we are approaching the limits of this electronic technology, and now we see an interesting development: light and lasers are taking over from electronics in computers.

Processors can now contain tiny lasers and light detectors, so they can send and receive data through small optical fibres, at speeds far exceeding those of the copper lines we use now. A few companies are even developing optical processors: chips that use laser light and optical switches, instead of currents and electronic transistors, to do calculations.

So, let us first take a closer look at why our current technology is running out of steam. And then, of course, answer the main question: when can you buy that optical computer?

Moore's Law is dying

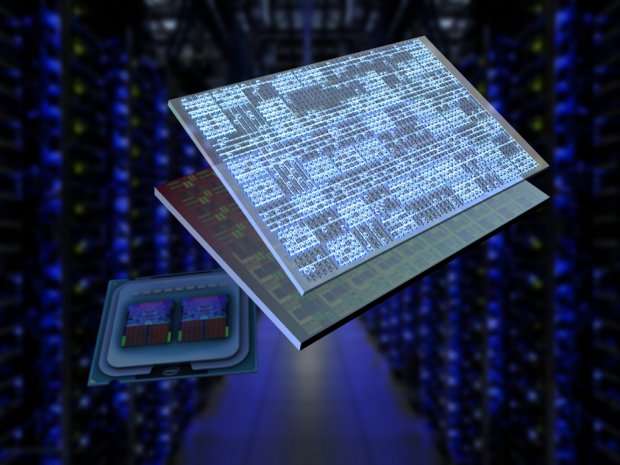

Computers work with ones and zeros for all their calculations, and transistors are the little switches that make that happen. Current processor chips, or integrated circuits, consist of billions of transistors. In 1965, Gordon Moore, who later co-founded Intel, predicted that the number of transistors per chip would double every year, a forecast he revised in 1975 to a doubling every two years. This became known as Moore's Law, and after more than half a century, it is still alive. Well, it appears to be alive...

In fact, we are fast reaching the end of this scaling. The smallest features of a transistor are now approaching atomic dimensions, which means that quantum mechanical effects are becoming a bottleneck. The electrons, which make up the current, can leak out of such tiny electrical components through quantum tunnelling, messing up the calculations.

Moreover, the newest technology, where transistors have a size of only five nanometers, is now so complex that it might become too expensive to improve. A semiconductor fabrication plant for this five-nanometer chip technology, to be operational in 2020, has already cost a steep 17 billion US dollars to build.
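To get a feel for what a doubling every two years means in practice, the rule can be written out in a few lines of Python. This is only an illustrative sketch: the function name `projected_transistors` is made up for this example, and the starting point (the Intel 4004 of 1971, with roughly 2,300 transistors) is an assumption brought in from outside this article.

```python
def projected_transistors(start_count, start_year, year, doubling_period_years=2.0):
    """Project a transistor count assuming a fixed doubling period."""
    # Number of doublings that fit between the two years.
    doublings = (year - start_year) / doubling_period_years
    return start_count * 2 ** doublings


# Assumed starting point, not taken from the article:
# the Intel 4004 (1971) with about 2,300 transistors.
START_COUNT = 2_300
START_YEAR = 1971

for year in (1971, 1981, 1991, 2001, 2011, 2021):
    count = projected_transistors(START_COUNT, START_YEAR, year)
    print(f"{year}: about {count:,.0f} transistors per chip")
```

Under these assumptions, fifty years of doubling takes the count from a few thousand to tens of billions, which is in line with the "billions of transistors" on today's chips.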

Computer processor chips have plateaued

A closer look, however, shows that performance growth in transistors has been slowing. Remember the past, when faster computers hit the market every few years? From 10 MHz clock speed in the 80s, to 100 MHz in the 90s and 1 GHz in 2000? That has stopped, and computers have been stuck at about 4 GHz for over 10 years.
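The size of this plateau becomes clearer with a little arithmetic. The sketch below extrapolates the historical trend quoted above (roughly a tenfold increase per decade) and compares it with the 4 GHz plateau. The function name `extrapolated_clock_hz` and the comparison year 2020 are assumptions chosen purely for illustration.

```python
def extrapolated_clock_hz(base_hz, base_year, year, factor_per_decade=10.0):
    """Extrapolate clock speed assuming a constant growth factor per decade."""
    decades = (year - base_year) / 10
    return base_hz * factor_per_decade ** decades


# Figures quoted in the text: 1 GHz in 2000, stuck at about 4 GHz since.
BASE_HZ = 1e9
BASE_YEAR = 2000
PLATEAU_HZ = 4e9

# Assumed comparison year, not taken from the article.
COMPARISON_YEAR = 2020

trend_hz = extrapolated_clock_hz(BASE_HZ, BASE_YEAR, COMPARISON_YEAR)
shortfall = trend_hz / PLATEAU_HZ

print(f"Trend line in {COMPARISON_YEAR}: {trend_hz / 1e9:.0f} GHz")
print(f"Plateau: {PLATEAU_HZ / 1e9:.0f} GHz, a factor of {shortfall:.0f} below the trend")
```

Had the old trend continued, clock speeds would have reached about 100 GHz by 2020, some 25 times higher than the 4 GHz we actually have.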

Of course, with smart chip design, for example parallel processing in multi-core processors, we can still increase performance, so your computer still gets faster. But this increased speed is not due to the transistors themselves.

And these gains come at a cost. All those cores on the processor need to communicate with each other, to share tasks, which consumes a lot of energy. So much so that the communication on and between chips is now responsible for more than half of the total power consumption of the computer.

Since computers are everywhere, in our smartphones and laptops, but also in datacenters and the internet, this energy consumption is actually a substantial part of our carbon footprint.

For example, there are bold estimates that intense use of a smartphone connected to the Internet consumes the same amount of energy as a fridge. Surprising, right? Do not worry about your personal electricity bill, though, as this is the energy consumed by the datacenters and networks. And the number and use of smartphones and other wearable tech keep growing.

Fear not: lasers to the rescue

So, how can we reduce the energy consumption of our computers and make them more sustainable? The answer becomes clear when we look at the Internet.

In the past, we used electrical signals, going through copper wires, to communicate. The optical fibre, guiding laser light, has revolutionised communications, and has made the Internet what it is today: fast and extending across the entire world. You might even have fibre all the way to your home.

We are using the same idea for the next generation of computers and servers. No longer will the chips be plugged into motherboards with copper lines; instead, we will use optical waveguides. These can guide light, just like optical fibres, and are embedded into the motherboard. Small lasers and photodiodes are then used to generate and receive the data signal. In fact, companies like Microsoft are already considering this approach for their cloud servers.

Optical chips are already a reality

I know what you're thinking right about now:

"But wait a second, how will these chips communicate with each other using light? Aren't they built to generate an electrical current?"

Yes, they are. Or, at least, they were. But interestingly, silicon chips can be adapted to include transmitters and receivers for light, alongside the transistors.

Researchers from the Massachusetts Institute of Technology in the US have already achieved this, and have now started a company (Ayar Labs) to commercialise the technology.

Here at Aarhus University in Denmark, we are thinking even further ahead: if chips can communicate with each other optically, using laser light, would it not also make sense that the communication on a chip, between cores and transistors, would benefit from optics?

We are doing exactly that. In collaboration with partners across Europe, we are figuring out whether we can make more energy-efficient memory by writing the bits and bytes using laser light, integrated on a chip. This is very exploratory research, but if we succeed, it could change future chip technology as early as 2030.

The future: optical computers on sale in five years?

So far so good, but there is a caveat: even though optics are superior to electronics for communication, they are not very suitable for actually carrying out calculations, at least not when we think in binary, in ones and zeros.

Here the human brain may hold a solution. We do not think in a binary way. Our brain is not digital, but analogue, and it makes calculations all the time.

Computer engineers are now realising the potential of such analogue, or brain-like, computing, and have created a new field, neuromorphic computing, in which they try to mimic how the human brain works using electronic chips.

And it turns out that optics are an excellent choice for this new brain-like way of computing.

The same kind of technology used by MIT and our team at Aarhus University to create optical communications between and on silicon chips can also be used to make such neuromorphic optical chips.

In fact, it has already been shown that such chips can do some basic speech recognition. And two start-ups in the US, Lightelligence and Lightmatter, have now taken up the challenge to realise such optical chips for artificial intelligence.

Optical chips are still some way behind electronic chips, but we're already seeing the results and this research could lead to a complete revolution in computer power. Maybe in five years from now we will see the first optical co-processors in supercomputers. These will be used for very specific tasks, such as the discovery of new pharmaceutical drugs.

But who knows what will follow after that? In ten years these chips might be used to detect and recognise objects in self-driving cars and autonomous drones. And when you are talking to Apple's Siri or Amazon's Echo, by then you might actually be speaking to an optical computer.

While the 20th century was the age of the electron, the 21st century is the age of the photon – of light. And the future shines bright.

Provided by ScienceNordic

This story is republished courtesy of ScienceNordic, the trusted source for English-language science news from the Nordic countries.